Overview

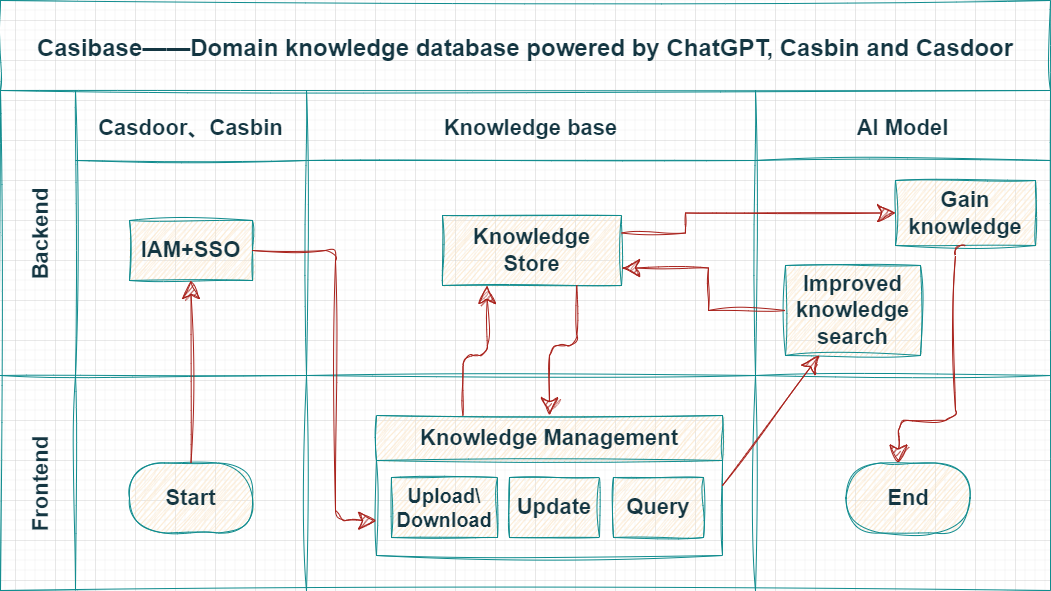

Casibase is an open-source, ChatGPT-powered domain knowledge database, instant messaging, and forum software.

Casibase features

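Built with Golang on an architecture that separates the front end from the back end, Casibase supports high concurrency, provides a web-based administration interface, and supports multiple languages (Chinese and English).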

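Casibase supports sign-in through third-party applications such as GitHub, Google, QQ, and WeChat, and additional third-party login providers can be added through plugins.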

Casibase manages knowledge using embeddings and prompt engineering, and supports custom embedding methods and language models.

Casibase integrates with existing systems through database synchronization, so users can migrate to Casibase smoothly.

Casibase supports mainstream databases such as MySQL, PostgreSQL, and SQL Server, and support for new databases can be added through plugins.

Casibase is also an asset management tool: it connects to assets over the RDP, VNC, and SSH protocols and handles remote connections to machines efficiently.

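Casibase's security log auditing lets you track and monitor remote connections, recording the connection start time, duration, and other relevant details. It also captures and analyzes API logs of Casdoor operations, improving security and operational transparency.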

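Casibase supports database management. Its database management features let you connect to, manage, and organize databases while controlling access permissions, which simplifies user management and authorization for database resources.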

How it works

Step 0 (prerequisites)

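Casibase's knowledge retrieval process is based on embeddings and prompt engineering, so it is strongly recommended that you first get a brief understanding of how embeddings work (see an introduction to embeddings).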
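As a minimal illustration of how embeddings are compared: two pieces of text are mapped to vectors $\mathbf{a}, \mathbf{b} \in \mathbb{R}^d$, and their similarity is measured by how closely the vectors point in the same direction. This document does not say which measure Casibase uses; cosine similarity is assumed here because it is a common choice for embedding search.

```latex
\operatorname{sim}(\mathbf{a}, \mathbf{b})
  = \frac{\mathbf{a} \cdot \mathbf{b}}{\lVert \mathbf{a} \rVert \, \lVert \mathbf{b} \rVert}
  = \frac{\sum_{i=1}^{d} a_i b_i}{\sqrt{\sum_{i=1}^{d} a_i^2} \, \sqrt{\sum_{i=1}^{d} b_i^2}}
  \in [-1, 1]
```

Values close to 1 mean the two texts sit close together in embedding space, which is what "semantically similar" means in the retrieval steps that follow.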

Step 1 (importing knowledge)

To get started with Casibase, users import knowledge and create a domain-specific knowledge database by following these steps:

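Configure storage: In the Casibase dashboard, users first configure the storage settings. This involves specifying the storage system that will hold knowledge-related files, such as documents, images, or any other relevant data. Users can choose from a variety of storage options according to their preferences and needs.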

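Upload files to storage: Once storage is set up, users can upload files containing domain-specific knowledge to the configured storage system. These files can come in various formats, such as text documents, images, or structured data files (for example, CSV or JSON).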

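Select the embedding method: After the files are uploaded, users choose the embedding method used to generate knowledge and the corresponding vectors. Embeddings are numerical representations of text or visual content that enable efficient similarity search and data analysis.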
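To make "choosing an embedding method" concrete, here is a minimal Go sketch of a pluggable embedder. The `Embedder` interface and `HashingEmbedder` type are hypothetical names, not Casibase's API, and the hashing-trick embedding is a deliberately simple stand-in for real methods such as Word2Vec or BERT: it only shows that each text becomes a fixed-length numeric vector.

```go
package main

import (
	"fmt"
	"hash/fnv"
	"math"
	"strings"
)

// Embedder is a hypothetical interface for a pluggable embedding method;
// Casibase's real abstraction may look different.
type Embedder interface {
	Embed(text string) []float64
}

// HashingEmbedder is a toy embedder based on the feature-hashing trick:
// each lowercase token is hashed into one of Dim buckets and counted.
// It is NOT Word2Vec, GloVe, or BERT. Dim must be positive.
type HashingEmbedder struct {
	Dim int
}

func (h HashingEmbedder) Embed(text string) []float64 {
	vec := make([]float64, h.Dim)
	for _, tok := range strings.Fields(strings.ToLower(text)) {
		hasher := fnv.New32a()
		hasher.Write([]byte(tok)) // writing to an FNV hash never fails
		vec[hasher.Sum32()%uint32(h.Dim)]++
	}
	// L2-normalize so that long and short texts produce comparable vectors.
	var norm float64
	for _, v := range vec {
		norm += v * v
	}
	if norm > 0 {
		norm = math.Sqrt(norm)
		for i := range vec {
			vec[i] /= norm
		}
	}
	return vec
}

func main() {
	var e Embedder = HashingEmbedder{Dim: 8}
	fmt.Println(e.Embed("Casibase stores domain knowledge"))
}
```

Switching to a different method would mean providing another type that satisfies the same interface, which is the kind of choice the dashboard step describes.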

How is knowledge embedded?

For text data: users can choose from various embedding methods, such as Word2Vec, GloVe, or BERT, to convert textual knowledge into meaningful vectors.

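For visual data: if the uploaded files contain images or other visual content, users can choose image embedding techniques, such as CNN-based feature extraction, to create representative vectors.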

More methods are coming soon...
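Because text and visual data call for different embedding techniques, an import pipeline has to decide which technique applies to each uploaded file. The sketch below assumes the decision is made from the file extension; the `modalityOf` function, the `Modality` labels, and the extension lists are all hypothetical and do not reflect the formats Casibase actually accepts.

```go
package main

import (
	"fmt"
	"path/filepath"
	"strings"
)

// Modality is a hypothetical label for the embedding pipeline a file should use.
type Modality string

const (
	Text       Modality = "text"
	Image      Modality = "image"
	Structured Modality = "structured"
	Unknown    Modality = "unknown"
)

// modalityOf picks a pipeline from the file extension (case-insensitive).
// The extension lists are illustrative assumptions only.
func modalityOf(name string) Modality {
	switch strings.ToLower(filepath.Ext(name)) {
	case ".txt", ".md", ".pdf", ".docx":
		return Text
	case ".png", ".jpg", ".jpeg", ".gif":
		return Image
	case ".csv", ".json":
		return Structured
	default:
		return Unknown
	}
}

func main() {
	for _, f := range []string{"manual.PDF", "diagram.png", "faq.json", "archive.zip"} {
		fmt.Printf("%-12s -> %s\n", f, modalityOf(f))
	}
}
```

Files that map to `Unknown` would need either a new embedding method or a conversion step before they can be embedded.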

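By following these steps, users populate their domain knowledge database with relevant information and the corresponding embeddings, which Casibase then uses for effective search, clustering, and knowledge retrieval. The embedding process lets the system capture the context and relationships between different pieces of knowledge, enabling more efficient and insightful knowledge management and exploration.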

Step 2 (retrieving knowledge)

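After you import your domain knowledge, Casibase converts it into vectors and stores them in a vector database. This vector representation enables capabilities such as similarity search and efficient retrieval of relevant information: you can quickly find relevant data based on context or content, run advanced queries, and uncover valuable insights in your domain knowledge.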
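The following Go sketch shows the core of a similarity search over stored vectors: keep (text, vector) records and return the k texts whose vectors have the highest cosine similarity to the query vector. The `VectorStore` type and its methods are hypothetical, the vectors in `main` are hand-written rather than produced by a real embedding model, and cosine similarity is an assumed choice of measure. A production vector database would typically use an approximate nearest-neighbour index instead of this brute-force scan.

```go
package main

import (
	"fmt"
	"math"
	"sort"
)

// Record pairs a piece of knowledge with its embedding vector.
type Record struct {
	Text   string
	Vector []float64
}

// VectorStore is a toy, in-memory stand-in for a vector database.
type VectorStore struct {
	records []Record
}

// Add stores a text together with its embedding.
func (s *VectorStore) Add(text string, vec []float64) {
	s.records = append(s.records, Record{Text: text, Vector: vec})
}

// cosine returns the cosine similarity of a and b (0 if either is all zeros).
// Both vectors are assumed to have the same length.
func cosine(a, b []float64) float64 {
	var dot, na, nb float64
	for i := range a {
		dot += a[i] * b[i]
		na += a[i] * a[i]
		nb += b[i] * b[i]
	}
	if na == 0 || nb == 0 {
		return 0
	}
	return dot / (math.Sqrt(na) * math.Sqrt(nb))
}

// TopK scores every record against the query and returns the k best texts.
func (s *VectorStore) TopK(query []float64, k int) []string {
	type scored struct {
		text  string
		score float64
	}
	results := make([]scored, 0, len(s.records))
	for _, r := range s.records {
		results = append(results, scored{r.Text, cosine(query, r.Vector)})
	}
	sort.Slice(results, func(i, j int) bool { return results[i].score > results[j].score })
	if k > len(results) {
		k = len(results)
	}
	out := make([]string, k)
	for i := 0; i < k; i++ {
		out[i] = results[i].text
	}
	return out
}

func main() {
	var s VectorStore
	s.Add("reset a password", []float64{1, 0, 0})
	s.Add("configure storage", []float64{0, 1, 0})
	s.Add("change account password", []float64{0.9, 0.1, 0})
	fmt.Println(s.TopK([]float64{1, 0, 0}, 2))
}
```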

Step 3 (building the prompt)

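Casibase runs a similarity search over the stored knowledge vectors to find the closest matches to the user's query. It then uses the search results to fill in a prompt template, building a specific question for the language model. This grounds the model's answer in the domain knowledge stored in Casibase, so responses are accurate and relevant to the context.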
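Filling a prompt template with search results can be sketched with Go's standard `text/template` package. The template wording, `PromptData`, and `BuildPrompt` are assumptions for illustration; this document does not show the prompt template Casibase actually uses.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// promptTmpl is a hypothetical prompt template: retrieved passages are listed
// first, then the user's question.
var promptTmpl = template.Must(template.New("prompt").Parse(`Answer the question using only the knowledge below.

Knowledge:
{{range .Contexts}}- {{.}}
{{end}}
Question: {{.Question}}
Answer:`))

// PromptData holds the similarity-search results and the user's question.
type PromptData struct {
	Question string
	Contexts []string
}

// BuildPrompt renders the template with the retrieved passages.
func BuildPrompt(question string, contexts []string) (string, error) {
	var b strings.Builder
	if err := promptTmpl.Execute(&b, PromptData{Question: question, Contexts: contexts}); err != nil {
		return "", err
	}
	return b.String(), nil
}

func main() {
	p, err := BuildPrompt("How do I configure storage?", []string{
		"Storage is configured in the dashboard.",
		"Files are uploaded after storage is set up.",
	})
	if err != nil {
		panic(err)
	}
	fmt.Println(p)
}
```

Keeping the template separate from the code that fills it makes it easy to experiment with wording without touching the retrieval logic.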

Step 4 (achieving your goal)

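At this stage, you have obtained the knowledge you need through Casibase. By converting domain knowledge into vectors and combining it with powerful language models such as ChatGPT, Casibase provides accurate and relevant answers to your queries. This lets you efficiently access and use the domain-specific information stored in Casibase to meet your knowledge needs.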

Step 5 (optional tuning)

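If the results are not entirely satisfactory, you can try to improve them by:

Adjusting the language model parameters

Asking multiple questions

Refining the original files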

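With these tuning options, you can make knowledge management in Casibase more effective, keep the system better aligned with your goals, and get more accurate and insightful information.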
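This document does not list which language model parameters Casibase exposes. Temperature is a common sampling parameter in LLM APIs, and the sketch below (an illustration, not Casibase code) shows why adjusting it changes results: the model's raw scores are divided by the temperature before a softmax, so lower values concentrate probability on the top choice and higher values spread it across alternatives. The function name `softmaxWithTemperature` is hypothetical.

```go
package main

import (
	"fmt"
	"math"
)

// softmaxWithTemperature turns raw model scores (logits) into probabilities.
// temperature must be > 0: lower values sharpen the distribution (more
// deterministic output), higher values flatten it (more varied output).
func softmaxWithTemperature(logits []float64, temperature float64) []float64 {
	probs := make([]float64, len(logits))
	if len(logits) == 0 {
		return probs
	}
	// Subtract the largest logit for numerical stability.
	maxLogit := math.Inf(-1)
	for _, l := range logits {
		if l > maxLogit {
			maxLogit = l
		}
	}
	var sum float64
	for i, l := range logits {
		probs[i] = math.Exp((l - maxLogit) / temperature)
		sum += probs[i]
	}
	for i := range probs {
		probs[i] /= sum
	}
	return probs
}

func main() {
	logits := []float64{2.0, 1.0, 0.1}
	for _, t := range []float64{0.2, 1.0, 2.0} {
		fmt.Printf("T=%.1f -> %.3f\n", t, softmaxWithTemperature(logits, t))
	}
}
```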

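Other ways to improve results (these may require changes to the source code):

Update the embedding results: improve the knowledge representation by adjusting the embedding results of your domain knowledge.

Modify the prompt template: customize the prompt to get more precise responses from the language model.

Explore different language models: try different models to find the one that best fits your response-generation needs.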
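Trying different language models is easier when the rest of the pipeline depends only on a small interface. In this sketch, `LanguageModel`, `compareModels`, and the two fake models are hypothetical; real providers from the supported-models tables would implement `Complete` by calling their own APIs.

```go
package main

import (
	"fmt"
	"strings"
)

// LanguageModel is a hypothetical abstraction over a model provider; the real
// Casibase model interface is not described in this document.
type LanguageModel interface {
	Name() string
	Complete(prompt string) (string, error)
}

// echoModel and upperModel are fake models used only to make the sketch runnable.
type echoModel struct{}

func (echoModel) Name() string                      { return "echo" }
func (echoModel) Complete(p string) (string, error) { return p, nil }

type upperModel struct{}

func (upperModel) Name() string                      { return "upper" }
func (upperModel) Complete(p string) (string, error) { return strings.ToUpper(p), nil }

// compareModels sends the same prompt to every model and collects the answers
// by model name, so they can be compared side by side.
func compareModels(prompt string, models []LanguageModel) map[string]string {
	answers := make(map[string]string, len(models))
	for _, m := range models {
		out, err := m.Complete(prompt)
		if err != nil {
			out = "error: " + err.Error()
		}
		answers[m.Name()] = out
	}
	return answers
}

func main() {
	answers := compareModels("what is casibase?", []LanguageModel{echoModel{}, upperModel{}})
	for name, a := range answers {
		fmt.Printf("%s: %s\n", name, a)
	}
}
```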

Online demo

Read-only site (any modification will fail)

- Chatbot (https://ai.casibase.com)

- Admin UI (https://ai-admin.casibase.com)

Writable site (the original data is restored every 5 minutes)

- Chatbot (https://demo.casibase.com)

- Admin UI (https://demo-admin.casibase.com)

Global administrator login:

- Username: admin

- Password: 123

Architecture

Casibase consists of two parts:

| Name | Description | Language | Source code |
|---|---|---|---|
| Front end | User interface of the Casibase application | JavaScript + React | https://github.com/casibase/casibase/tree/master/web |
| Back end | Server-side logic and API of Casibase | Golang + Beego + MySQL | https://github.com/casibase/casibase |

Supported models

Language models

| Model | Subtypes | Link |
|---|---|---|
| OpenAI | gpt-4-32k-0613, gpt-4-32k-0314, gpt-4-32k, gpt-4-0613, gpt-4-0314, gpt-4, gpt-3.5-turbo-0613, gpt-3.5-turbo-0301, gpt-3.5-turbo-16k, gpt-3.5-turbo-16k-0613, gpt-3.5-turbo, text-davinci-003, text-davinci-002, text-curie-001, text-babbage-001, text-ada-001, text-davinci-001, davinci-instruct-beta, davinci, curie-instruct-beta, curie, ada, babbage | OpenAI |
| Hugging Face | meta-llama/Llama-2-7b, tiiuae/falcon-180B, bigscience/bloom, gpt2, baichuan-inc/Baichuan2-13B-Chat, THUDM/chatglm2-6b | Hugging Face |
| Claude | claude-2, claude-v1, claude-v1-100k, claude-instant-v1, claude-instant-v1-100k, claude-v1.3, claude-v1.3-100k, claude-v1.2, claude-v1.0, claude-instant-v1.1, claude-instant-v1.1-100k, claude-instant-v1.0 | Claude |
| OpenRouter | google/palm-2-codechat-bison, google/palm-2-chat-bison, openai/gpt-3.5-turbo, openai/gpt-3.5-turbo-16k, openai/gpt-4, openai/gpt-4-32k, anthropic/claude-2, anthropic/claude-instant-v1, meta-llama/llama-2-13b-chat, meta-llama/llama-2-70b-chat, palm-2-codechat-bison, palm-2-chat-bison, gpt-3.5-turbo, gpt-3.5-turbo-16k, gpt-4, gpt-4-32k, claude-2, claude-instant-v1, llama-2-13b-chat, llama-2-70b-chat | OpenRouter |
| Ernie | ERNIE-Bot, ERNIE-Bot-turbo, BLOOMZ-7B, Llama-2 | Ernie |
| iFlytek | spark-v1.5, spark-v2.0 | iFlytek |
| ChatGLM | chatglm2-6b | ChatGLM |
| MiniMax | abab5-chat | MiniMax |
| Local | custom-model | Local Computer |
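If you configure models programmatically, a lookup like the one below can reject a provider/subtype combination that is not in the language-model table before any request is made. The `supportedModels` map is a hand-copied subset of that table and `isSupported` is a hypothetical helper; neither is part of Casibase.

```go
package main

import "fmt"

// supportedModels is a small, hand-copied subset of the language-model table;
// it is not loaded from Casibase itself.
var supportedModels = map[string][]string{
	"OpenAI":  {"gpt-4", "gpt-3.5-turbo", "gpt-3.5-turbo-16k"},
	"Claude":  {"claude-2", "claude-instant-v1"},
	"iFlytek": {"spark-v1.5", "spark-v2.0"},
	"MiniMax": {"abab5-chat"},
}

// isSupported reports whether subType is listed for the given provider.
// Unknown providers yield a nil slice, so the loop simply finds nothing.
func isSupported(provider, subType string) bool {
	for _, s := range supportedModels[provider] {
		if s == subType {
			return true
		}
	}
	return false
}

func main() {
	fmt.Println(isSupported("OpenAI", "gpt-4"))
	fmt.Println(isSupported("Claude", "gpt-4"))
}
```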

Embedding models

| Model | Subtypes | Link |
|---|---|---|
| OpenAI | AdaSimilarity, BabbageSimilarity, CurieSimilarity, DavinciSimilarity, AdaSearchDocument, AdaSearchQuery, BabbageSearchDocument, BabbageSearchQuery, CurieSearchDocument, CurieSearchQuery, DavinciSearchDocument, DavinciSearchQuery, AdaCodeSearchCode, AdaCodeSearchText, BabbageCodeSearchCode, BabbageCodeSearchText, AdaEmbeddingV2 | OpenAI |
| Hugging Face | sentence-transformers/all-MiniLM-L6-v2 | Hugging Face |
| Cohere | embed-english-v2.0, embed-english-light-v2.0, embed-multilingual-v2.0 | Cohere |
| Ernie | Default | Ernie |
| Local | custom-embedding | Local Computer |